Source: Ben Goertzel, "The Power of Decentralized AI," Rise of AI Conference (2019); talk available online.

The Context.

After listening to Goertzel's 2019 keynote at the Rise of AI Conference, I began asking myself some questions for which I do not yet have clear hypotheses.

Some of these questions may seem silly to those steeped in the AI space; if so, please do let me know and point me to strong sources for my further research and development. I have much work to do.

Some questions:

- How do we assess the dystopian risks of more generalized AI and the possibility of artificial superintelligence ("post-human" SI)?

- What are our "planetary hedges" against black swan risk classes?

- How do we work now so that the AI we are creating not only delivers maximum benefit to people but also serves as an appropriate seed or "child form" for the superintelligence that may unfold over the coming decades and centuries?

- To what extent can decentralized governance over global AI networks bias the next phase of AI development in positive directions, and how would such a governance structure be instantiated?

- In what ways, and across which areas of the AI stack, might a less decentralized coordination structure be beneficial?

- Indeed, how do we as a society define a positive meta-narrative that can organize us toward a shared (protopian?) ethic?

And perhaps a personal question that grounds some of the above: how can I deploy my skill stack to contribute positively to these issues, which pose x-risk? 🤔

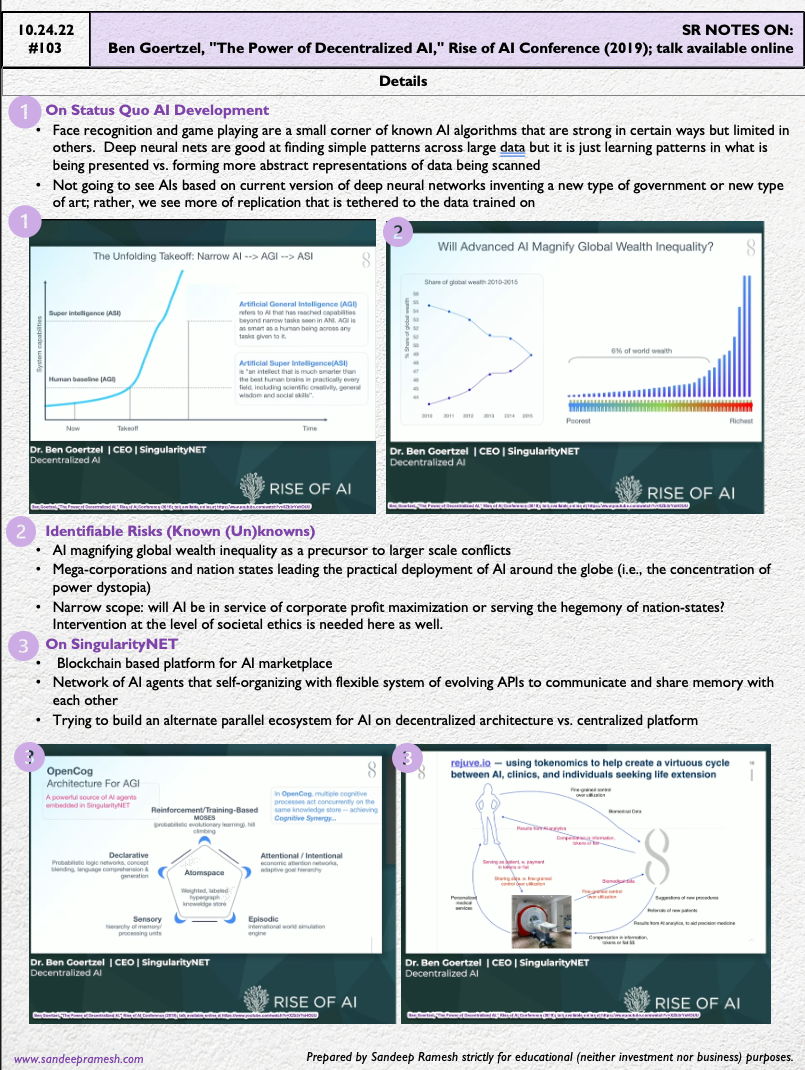

The Details.