Source: Friston, Ramstead, Kiefer et al., "Designing Ecosystems of Intelligence from First Principles," arXiv:2212.01354 (Dec. 2022)

The Context.

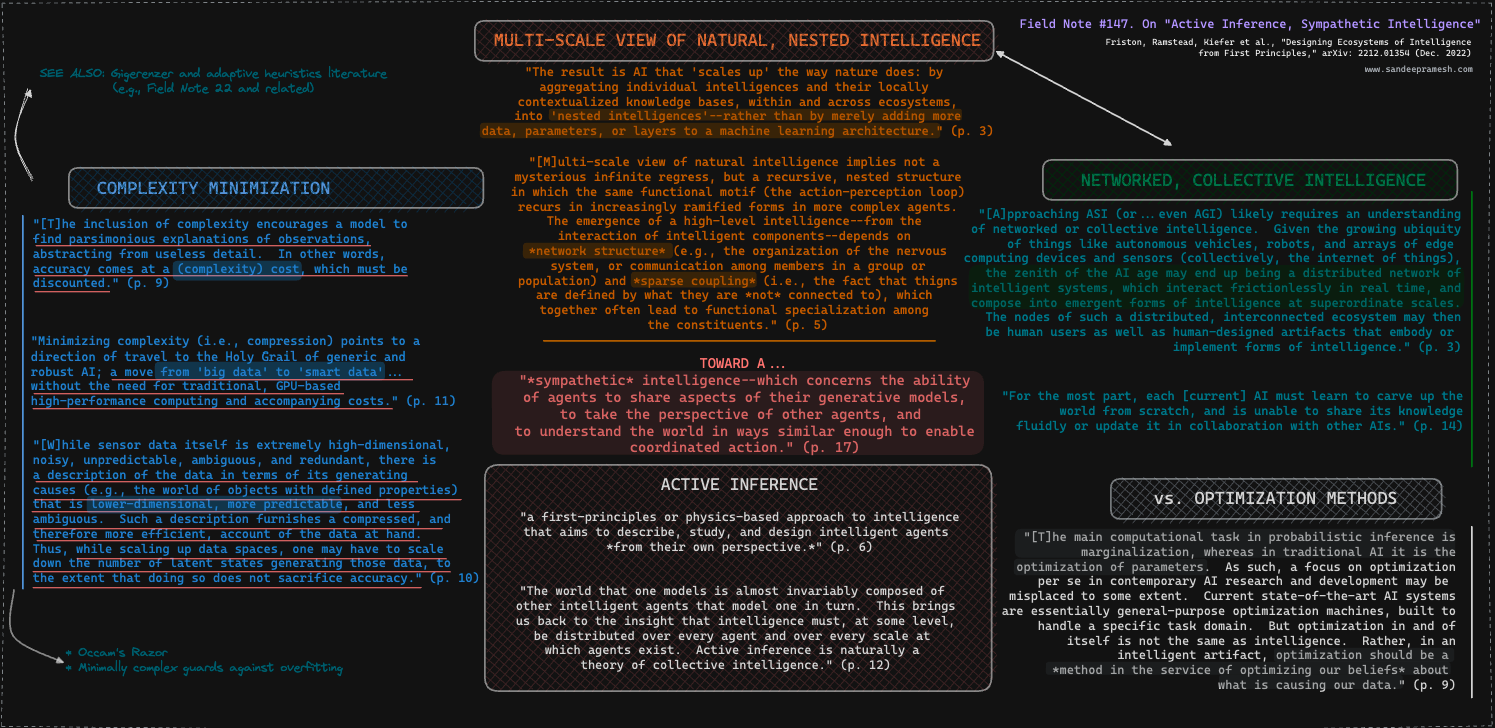

Against the siloed approach to AI development and deployment, with its narrow and overly simplistic "problem/solution" and "bug/fix" orientations, the authors make a compelling claim:

"[A]pproaching ASI (or...even AGI) likely requires an understanding of networked or collective intelligence. Given the growing ubiquity of things like autonomous vehicles, robots, and arrays of edge computing devices and sensors..., the zenith of the AI age may end up being a distributed network of intelligent systems that interact frictionlessly in real time and compose into emergent forms of intelligence at superordinate scales" (Friston, Ramstead, Kiefer et al., 3).

At stake is the concept of "sympathetic intelligence," which involves the ability of agents to share aspects of their generative models, take the perspective of other agents, and understand the world in ways similar enough to enable coordinated action (Id. at 17).

Further reading in this literature, along with personal experiments (soon to be in development), should deepen our understanding of these concepts.

Additional keyword breadcrumbs:

- variational free energy (reminiscent of the idea of humans as "entropy reduction machines")

- self-evidencing (a system actively seeks and generates evidence that supports its own predictions or hypotheses)

- vector-based representation of human knowledge (which perhaps "raises the question whether natural languages can really play the role of a shared human-machine language without modification" (id. at 14))
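
For orientation, the first two breadcrumbs can be tied together with the standard textbook form of variational free energy. This is a sketch, not the paper's own notation: the symbols $o$ (observations), $s$ (hidden states), $p(o, s)$ (the agent's generative model), and $q(s)$ (its approximate posterior belief) are assumptions introduced here for illustration.

```latex
F[q] \;=\; \mathbb{E}_{q(s)}\!\left[\ln q(s) - \ln p(o, s)\right]
     \;=\; D_{\mathrm{KL}}\!\left[\,q(s)\,\|\,p(s \mid o)\,\right] \;-\; \ln p(o)
     \;\ge\; -\ln p(o)
```

Because the KL divergence is non-negative, $F$ is an upper bound on surprise, $-\ln p(o)$. Minimizing $F$ therefore pushes up a lower bound on the model evidence $\ln p(o)$, which is one way to read "self-evidencing": the system acts and updates its beliefs so as to gather evidence for its own model.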

The Map.