Source: Neil Thompson et al., "The Computational Limits of Deep Learning," avail. online at arXiv (July 2022)

The Main Idea: Computational Gauntlet.

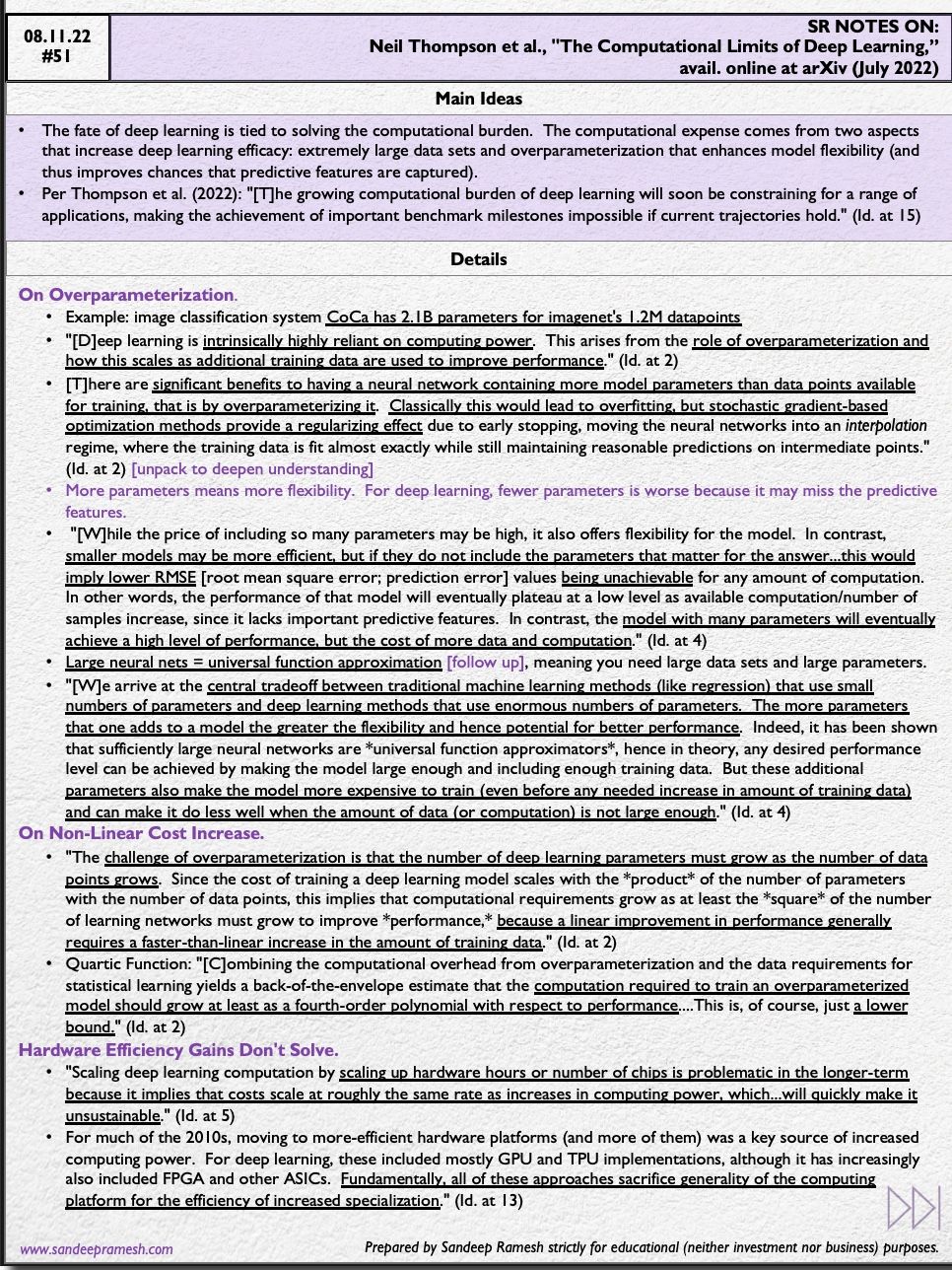

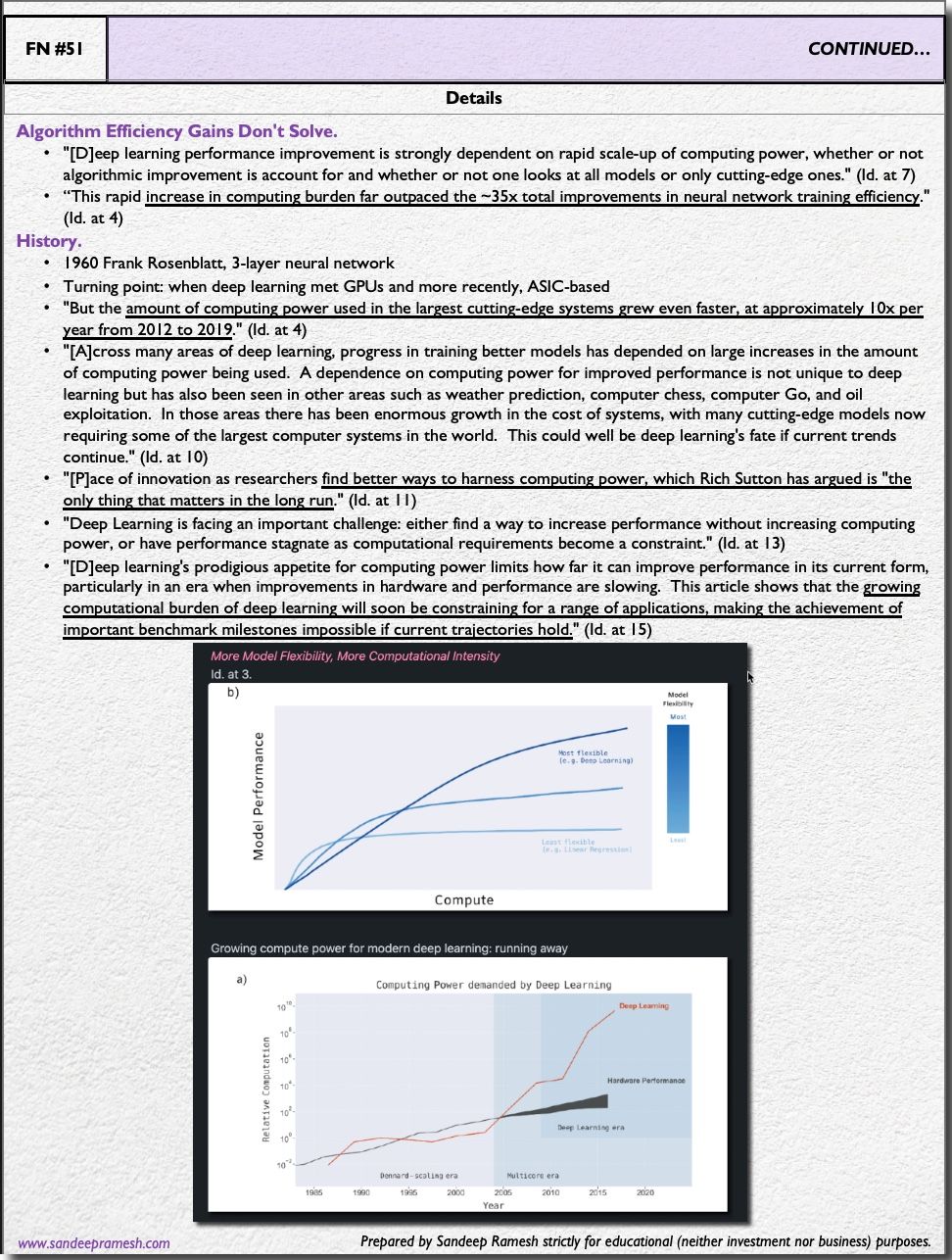

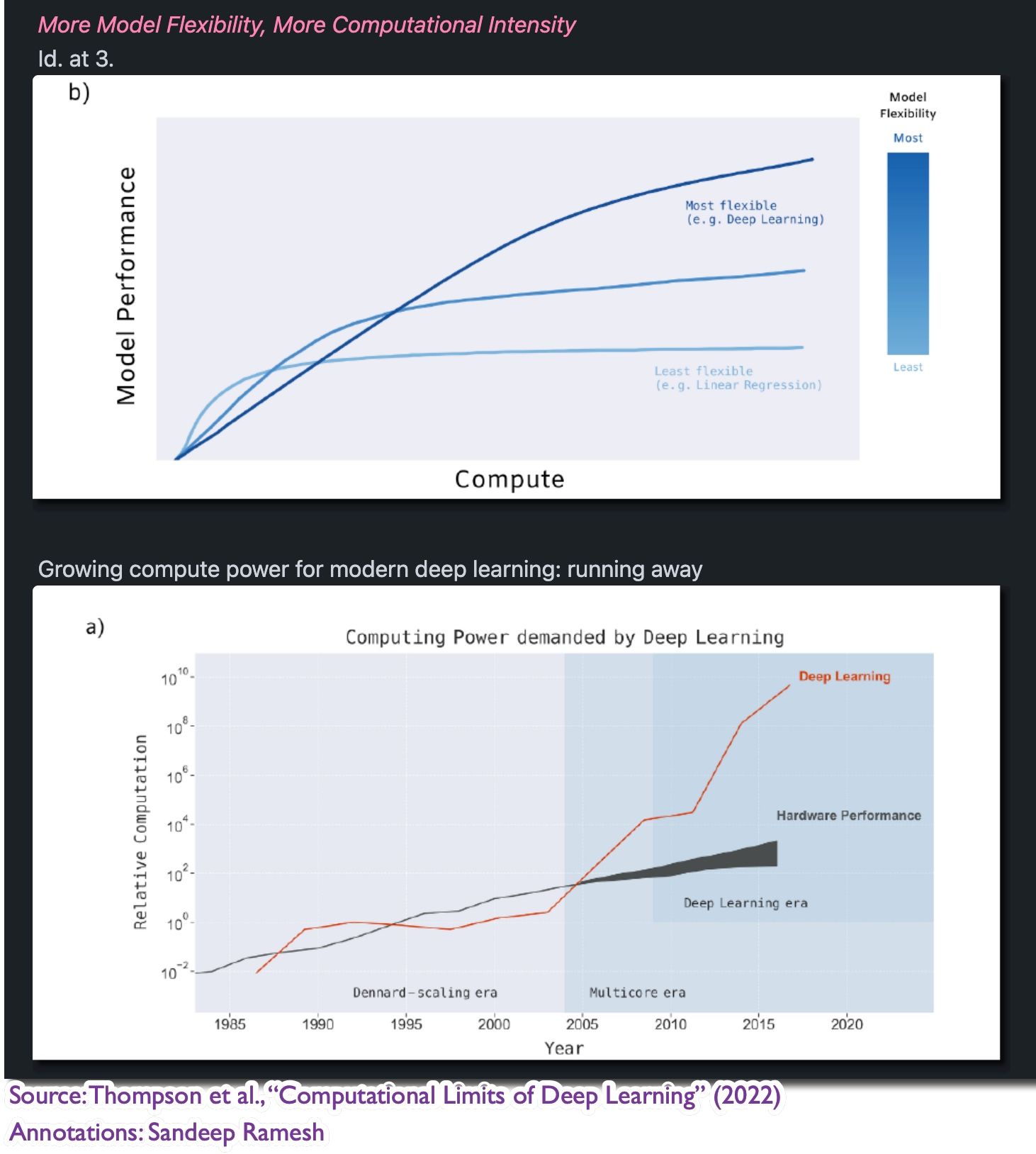

The fate of deep learning is tied to reducing its computational burden. That expense comes from the same two factors that make deep learning effective: extremely large data sets, and overparameterization, which makes models more flexible (and thus improves the chances that predictive attributes are captured).

"[T]he growing computational burden of deep learning will soon be constraining for a range of applications, making the achievement of important benchmark milestones impossible if current trajectories hold." (Id. at 15)

The Visuals.

The Details.